Kaggle competition: House price prediction

Keywords: python, PCA, decision trees, random forest, boosting

In this small machine learning project, I used a dataset from Kaggle to build a machine learning pipeline that predicts the sale prices of houses.

The dataset contains 1460 observations with 79 features. I performed the following steps:

- Check for missing values and discard columns with too many missing values

- Impute missing values in numerical columns using Iterative Imputer

- Encode categorical columns with One-Hot Encoder

- Create a pipeline that applies imputation, standard scaling, and the machine learning models

- Compare results with and without PCA

- Choose the best parameters for the machine learning models

- Finally, apply the pipeline to the test dataset provided by Kaggle for submission
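
The steps above can be sketched with scikit-learn roughly as follows. This is a minimal illustration, not the project's actual code: the helper name `build_search`, the 50% missing-value threshold, the most-frequent imputation for categorical columns, the 95% explained-variance setting for PCA, and the parameter grid are all assumptions chosen for the example.

```python
import pandas as pd
# This experimental import must come before IterativeImputer is imported.
from sklearn.experimental import enable_iterative_imputer  # noqa: F401
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.impute import IterativeImputer, SimpleImputer
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler


def build_search(X: pd.DataFrame, max_missing: float = 0.5, use_pca: bool = False):
    """Return (kept_columns, unfitted GridSearchCV) for a feature DataFrame.

    Hypothetical helper: the threshold and grid values are illustrative only.
    """
    # Discard columns whose share of missing values exceeds the threshold.
    missing_share = X.isna().mean()
    kept = missing_share[missing_share <= max_missing].index.tolist()
    X = X[kept]

    num_cols = X.select_dtypes(include="number").columns.tolist()
    cat_cols = X.select_dtypes(exclude="number").columns.tolist()

    # Numerical columns: iterative imputation, then standard scaling.
    numeric = Pipeline([
        ("impute", IterativeImputer(random_state=0)),
        ("scale", StandardScaler()),
    ])
    # Categorical columns: most-frequent imputation (an assumption), then one-hot.
    categorical = Pipeline([
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("onehot", OneHotEncoder(handle_unknown="ignore")),
    ])
    preprocess = ColumnTransformer(
        [("num", numeric, num_cols), ("cat", categorical, cat_cols)],
        sparse_threshold=0.0,  # force dense output so PCA can be applied
    )

    steps = [("preprocess", preprocess)]
    if use_pca:
        # Keep enough components to explain 95% of the variance (assumed value).
        steps.append(("pca", PCA(n_components=0.95, svd_solver="full")))
    steps.append(("model", GradientBoostingRegressor(random_state=0)))

    # Small illustrative grid; parameter names are prefixed with the step name.
    param_grid = {
        "model__n_estimators": [100, 200],
        "model__max_depth": [2, 3],
    }
    search = GridSearchCV(
        Pipeline(steps),
        param_grid,
        cv=5,
        scoring="neg_root_mean_squared_error",
    )
    return kept, search
```

Calling `kept, search = build_search(X, use_pca=True)` followed by `search.fit(X[kept], y)` runs the cross-validated search; building one search with `use_pca=True` and one with `use_pca=False` gives the with/without PCA comparison, and `search.best_params_` holds the selected parameters.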

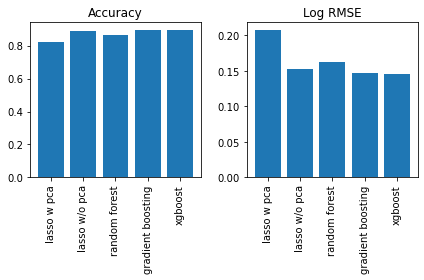

Boosting methods gave the best results. The best model in terms of accuracy was Gradient Boosting, and the best model in terms of log RMSE (lower is better) was XGBoost.
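
For reference, the log RMSE metric can be computed as in the sketch below. It assumes that "log RMSE" here means the root mean squared error between the natural logarithms of the predicted and actual sale prices, which is how the Kaggle competition scores submissions. The function name `log_rmse` is hypothetical.

```python
import math


def log_rmse(y_true, y_pred):
    """RMSE between the natural logs of actual and predicted prices.

    Both sequences must have the same length and contain positive values.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    squared_errors = [
        (math.log(pred) - math.log(true)) ** 2
        for true, pred in zip(y_true, y_pred)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

Because the error is taken on the log scale, a prediction that is off by 10% costs about the same for a cheap house as for an expensive one.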

The code can be found on GitHub.